How to create a digital supply chain to drastically improve manufacturing productivity

At the recent Moabcon 2012 conference in Salt Lake City, UT, I had a chance to meet with many of the people in government, universities and private industry who run the most powerful computers in the world. The high performance computing (HPC) space is doing quite well for a number of reasons, including the need to process big data applications as well as the fact that many universities are flush with cash and continue to see value in purchasing these super-powerful computers. The reason universities have such large budgets for HPC has to do with the government providing virtually limitless student loans, which in turn has caused tuitions to skyrocket, as well as more direct government stimulus funding.

One organization looking to harness this massive computing potential to help boost the US and global economy is NCSA, the National Center for Supercomputing Applications. Located at the University of Illinois at Urbana-Champaign, this 25-year-old organization regularly works with universities and government agencies to help them understand how they can partner more effectively with industry.

If you think of the HPC space as fertile ground for innovation that doesn’t get commercialized, you could equate it to Xerox PARC – the organization responsible for many of the innovations we take for granted today. Xerox famously didn’t take full advantage of technologies like the GUI, mouse and vectorized printer language – it was left to others like Apple, Adobe and Microsoft to bring these innovations to the public.

NCSA is a bridge that gets innovations out of the lab and into industry – in the above example, the equivalent of taking inventions like the GUI and the point-and-click device and helping a company like Apple turn them into a Mac.

In fact, they helped commercialize MOSAIC (the first Internet GUI) and the Apache server, and helped Caterpillar’s modeling and simulation activities get started. They also helped Microsoft prototype its Windows HPC OS.

By partnering with companies through JVs and other means, they have been able to help many in a number of industries, but manufacturing is the organization’s sweet spot, in part because innovations are generally shared with other manufacturers and can be implemented across the industry. Contrast this with oil & gas or Wall Street, where seismic modeling data and trading algorithms, respectively, are considered proprietary. So the goal of NCSA is to solve problems that can lift many boats at once.

Moreover, manufacturing has been using HPC for years, for example in the automotive space. There are even consortia of manufacturers working together to advance subsets of the industry.

In my discussion with Merle Giles, who heads the NCSA initiative, he explained that resources in many companies are limited, meaning their HPC solutions are spread so thin that many users are forced to work with only 8 or, worse, 4 cores. In some cases, such as designing a gear in an oil differential using 32 cores, the process can take four months or more.

But since modeling and simulation is generally given two weeks in the production-line system, these efforts won’t ever see the light of day.

The good news is that, until recently, only three government agencies were traditionally in the business of HPC: DoD, DoE and NSF. But more recently the Department of Commerce has gotten involved and, as Giles says, “The White House actually gets it.”

He points out that NDEMC, the National Digital Engineering & Manufacturing Consortium, has signed a five-year MOU as a public-private partnership targeted at using advanced modeling and simulation in SMEs – particularly manufacturers in the supply chain.

Merle explained that in many companies, as described above, the distribution of cores is not proportionally greater for power users, meaning there are departments that need far more horsepower than they have access to. In fact, at one ISV, he says, 90% of users have access to fewer than 9 cores and 40% have access to fewer than 5 cores.

He asked, “What could be done if this same model could be run on more cores?” He further asked, “Is this a chicken and the egg syndrome?”

In order to assist the manufacturing world, NCSA has developed iForge, a purpose-built cluster to help businesses with HPC as a service. According to Giles, “We architected the machine to make Abaqus scream.”

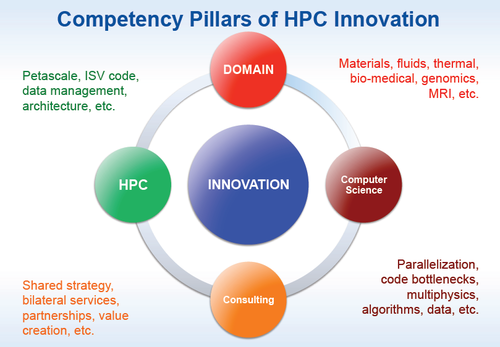

More importantly, he points out that to get a working system you need collaboration between the computer science people, the HPC people and the domain experts. Moreover, you likely don’t want to have these people interacting with industry, so it makes sense to have a consultant involved in the process as well.

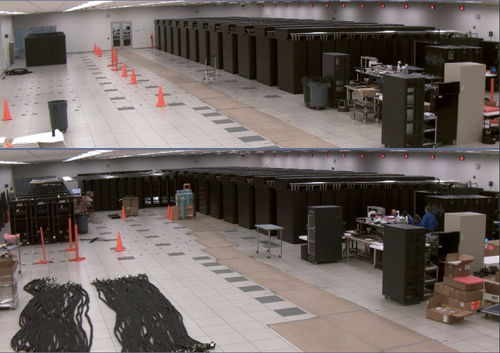

See the progress of Blue Waters construction live

Here is the latest picture of this machine being built, from April 18, 2012.

From there we went on to discuss Blue Waters, the first open-access system tasked with achieving one petaflops or greater on a wide variety of applications. Nine target apps have to run at this speed on a sustained basis, which roughly equates to a top theoretical speed of a blazing 10 petaflops. Moreover, this system is targeted for 500 petabytes of data storage, making it the world’s largest computer in terms of storage and the fastest based on sustained processing speed.
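To make the gap between sustained and theoretical speed concrete, here is a minimal Python sketch. It assumes only the round figures quoted above (1 petaflops sustained, roughly 10 petaflops peak); the function and variable names are illustrative and do not come from NCSA or Cray.

```python
def sustained_fraction(sustained_pflops: float, peak_pflops: float) -> float:
    """Return the share of theoretical peak that is delivered on real applications."""
    if peak_pflops <= 0:
        raise ValueError("peak_pflops must be positive")
    return sustained_pflops / peak_pflops


# Round figures from the article; both are approximate targets, not measurements.
SUSTAINED_PFLOPS = 1.0   # required sustained speed on the target apps
PEAK_PFLOPS = 10.0       # rough theoretical peak speed

fraction = sustained_fraction(SUSTAINED_PFLOPS, PEAK_PFLOPS)
print(f"Sustained speed is about {fraction:.0%} of theoretical peak")
```

In other words, under these assumed figures a real application is expected to deliver roughly a tenth of what the hardware could do on paper, which is why the sustained number is the harder target.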

Some mind-numbing stats about this Cray adaptive supercomputer are as follows: it has at least 380 cabinets, more than 25,000 compute nodes, more than 17,000 spinning disks and more than 380,000 AMD cores. Another major milestone of this machine is its more than 1.5 petabytes of aggregate system memory. As a reminder, this is 1.5 million gigabytes, or roughly 93,000 times more than a server with 16 GB of RAM.
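The memory comparison is easy to check. The short Python sketch below reproduces the arithmetic, assuming decimal units (1 petabyte = 1,000,000 gigabytes) and treating 1.5 petabytes as an exact figure even though the article gives it as a lower bound; the variable names are illustrative.

```python
GB_PER_PB = 1_000_000  # decimal units: 1 PB = 10^6 GB (assumption)

system_memory_pb = 1.5   # aggregate system memory quoted for Blue Waters
server_memory_gb = 16    # RAM in the comparison server

system_memory_gb = system_memory_pb * GB_PER_PB
ratio = system_memory_gb / server_memory_gb

print(f"{system_memory_gb:,.0f} GB of system memory")
print(f"about {ratio:,.0f} times the RAM of a {server_memory_gb} GB server")
```

With these inputs the ratio comes out just under 94,000, in line with the rough figure above.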

From there Giles discussed the future, asking what would happen if, instead of a supplier having to send parts like bottles by the trainload so a manufacturer can test them and suggest improvements, we had a digital supply chain with 100 Gbps of bandwidth between the OEM and supplier. At that point he asked why we are spending so much money on rural bandwidth when it is really needed between major manufacturing centers and major industrial cities.

He went on to say that what we need is to introduce far more capability into that supply chain. And if we can do it for manufacturing, then we can do it for cancer, and then we can do it for biology. From there he discussed NNMI and 3-D printing, which is currently limited to plastic. He said no one has done it with metal or titanium, but that’s where the demand is. He suggested that we will need modeling and simulation to achieve 3-D metal printing.

He concluded that the HPC business can’t stay with HPC as usual, as it is generally disconnected from the preprocessing challenges. He said the CAD and CAE business never grew up with parallel programming – it typically runs on a single core. He exclaimed, “We have our head in the sand with HPC. We don’t even ask what the on-ramp is or preprocessing or the off ramp which is visualization.” Moreover, he said users care about cloud, so we have to care about cloud, and the access model needs to change. He said that the problem will take billions of dollars to solve and we need to work together – and if we don’t change the business model, we don’t get real work done.

The more I spoke with Giles, the more I realized how much potential there is to get some of the most powerful computers in the world solving more real-world problems. The potential for better products and entirely new categories of drugs and other useful products is beyond our current comprehension. And if you think technology has changed the world immensely so far, just wait.