Guest blog post originally published on Techzone360.

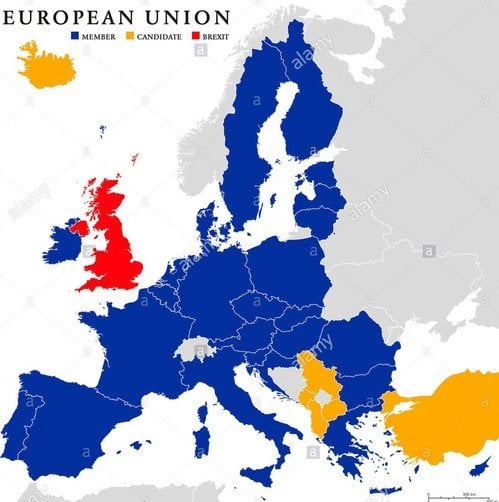

Last September, a U.K. House of Commons committee concluded that it is too soon to regulate artificial intelligence (AI). Its recommendation comes too late: The EU General Data Protection Regulation (GDPR), which comes into force next year, includes a right to obtain an explanation of decisions made by algorithms and a right to opt out of some algorithmic decisions altogether. These regulations do little to help consumers, but they will slow down the development and use of AI in Europe by holding developers to a standard that is often unnecessary and infeasible.

Although the GDPR is designed to address the risk of companies using algorithms to make unfair decisions about individuals, its rules will provide little benefit because other laws already protect individuals' interests in this regard. For example, when it comes to a decision to fire a worker, laws already exist to require an explanation, even if AI is not used. In other cases where no explanation is required, such as refusing a loan, there is no compelling reason to require an explanation on the basis of whether the entity making the decision is a human or a machine. (Loan companies have to tell applicants what information their decisions are based on, whether they use AI or not, but they do not have to explain the logic of their reasoning.)

AI detractors might argue that an inability to explain some algorithms means they should not be used. But the real problem here is not explaining algorithms; it is explaining correlation. This is hardly a new problem in science. Penicillin has been in mainstream use since the 1940s, but scientists are only now coming to understand why it has been so effective at curing infections. Nobody ever said we should leave infections untreated because we do not understand how antibiotics work. Today, AI systems can recommend drugs to treat diseases. Doctors may not understand why the software recommends a drug any better than they understand why the drug might help; all that matters is that they use their expertise to judge the safety of the treatment and monitor how it works.

So if transparency is not the answer, what is? Monitoring behavior is a far better way to maintain accountability and control for undesirable outcomes, and again, this is true regardless of whether a decision is being made by a human or an algorithm. For example, to combat bias, transparency can at most ensure that an algorithm disregards ethnic markers like skin color, but that would not even come close to covering all the subtle characteristics that are used as bases for racism, and which differ tremendously throughout the world. A better approach is to look for evidence of bias in outcomes over time and make adjustments as necessary.

In short, policymakers should create technology-neutral rules to avoid unnecessarily distorting the market by favoring human decisions over algorithmic ones. If a decision needs an explanation, this should be so regardless of whether technology is used to arrive at that decision. And if algorithms that cannot be easily explained consistently make better decisions in certain areas, then policymakers should not require an explanation. Similarly, the need for regulators to monitor outcomes is independent of how decisions are made.

Unfortunately, when the GDPR comes into force throughout the EU in May 2018, it will impose unnecessary restraints on AI and stifle many social and economic benefits. The regulation should be amended before that happens. And if EU policymakers refuse to revisit the issue, member states should use national legislation to establish reasonable alternatives for when AI cannot produce a legible explanation and provide legal authorization for the most important uses of AI, such as self-driving cars.

Nick Wallace (@NickDelNorte) is a Brussels-based senior policy analyst with the Center for Data Innovation, a data policy think tank.